This is a call for proposals for a workshop on user-centred interactions with the internet of things at NordiCHI 2006, October 14 and 15, 2006 in Oslo, Norway.

The user-centred Internet of Things

The so-called ‘Internet of Things’ is a vision of the future of networked things that share a record of their interactions with context, people and other objects. The evolution of networking to include objects occupying space and moving within the physical world presents an urgent design challenge for new kinds of networked social practice. The challenge for design is to overcome the current overarching emphasis on business and technology that has largely ignored practices that fall outside of operational efficiency scenarios.

What is urgently needed is a user-centred approach to understanding the physical, contextual and social relationships between people and the networked things they interact with.

The mobile device as early enabler

The mobile phone is likely to play a key role in the early adoption of the internet of things. Mobile devices offer ubiquitous networks and interfaces, enabling otherwise offline objects at the edges of the network. Near Field Communication (NFC: http://www.nfc-forum.org/aboutnfc/) is a mobile technology that has been designed to integrate networked services into physical space and objects. NFC introduces a sense of ‘touch’, where interactions between devices are initiated by physical proximity.

In use, the mobile phone brings with it a history of personal and social activities and contexts. It is in this evolution that we see user-agency and social motivation emerging as an interesting area within the internet of things.

Workshop goals

In this workshop we intend to build knowledge around the hands-on problems and opportunities of designing user-centred interactions with networked objects. Through a process of ‘making things’ we will look closely at the kinds of interactions we may want to design with networked objects, and what roles the mobile phone may play in this.

We will focus on the design of simple, effective and innovative interactions between mobile phones and physical objects, rather than focusing on technical or network issues.

The primary questions for the workshop are:

What kinds of common interactions will emerge as networked objects become everyday?

What role will the mobile phone have to play in these interactions?

How do we encourage playful, experimental and exploratory use of networked things?

Some secondary questions are:

What interaction models can we bring to the internet of things? Do the fields of embodied interaction, tangible, social, ubiquitous or pervasive computing cover the required ground for designers?

What new kinds of social practices could emerge out of the possibilities presented by networked things?

How will the physical form of everyday objects and spaces be transformed by networks and near field interactions? How would this be reflected in users’ behaviour?

How can the design of physical objects help in overcoming potential information or interaction overload, and how does search or findability change when in a physical context?

How can we move beyond commonsensical features such as object activation or findability?

What kind of user-communities will co-opt the technology and how will they hack, adjust and re-form it for their needs?

Workshop structure

Each workshop day will begin with a keynote presentation from invited experts. On the first day, participants will each give a short presentation of their position paper, no longer than 5 minutes.

Groups of 3-4 people, each with different skills and backgrounds, will then work on concepts, scenarios and prototypes. Prototypes may take the form of physical models, scenarios or enactments. We encourage the use of our wood, plastic and rapid prototyping workshops to create physical prototypes of selected concepts. We will provide workshop assistants for the creation of physical models.

Outcomes

The outcomes should span a range of implementation styles, allowing for a variety of outputs that speak to a wide audience. A report on the workshop will be written and published on the Touch project website and in other relevant channels.

Call for participation

The workshop is open to participants from human factors, mobile technology, social science, interaction and industrial design. Practitioners and those with industrial experience are strongly encouraged to apply. Prior research work on embodied interaction, social and tangible computing would be particularly relevant. Participants will be selected based on their relevance to the workshop and the overall balance of the group. Space is limited to 25 participants.

Call for short position papers

Application is by position paper no longer than two pages. The position paper can be visual or experimental in design and content. The themes should cover an issue that is relevant to the design of interactions with everyday objects.

The deadline for papers is 1 August; selected participants will be notified on 9 August. The workshop itself is on October 14 and 15, 2006.

Papers and any questions should be submitted to timo (at) elasticspace (dot) com before 1 August.

Organisers

Timo Arnall is a designer and researcher at the Oslo School of Architecture & Design (AHO). Timo’s research looks at practices around ubiquitous computing in urban space. At the moment his work focuses on the personal and social use of Radio Frequency Identification (RFID) technologies, looking for potential interactions with objects and city spaces through mobile devices. Previously his research looked at flyposting and stickering in public space, suggesting possible design strategies for combining physical marking and digital spatial annotation. Timo leads the research project Touch at AHO, looking at the use of mobile technology and Near Field Communication.

Julian Bleecker is a Research Fellow at the University of Southern California’s Annenberg Center for Communication and an Assistant Professor in the Interactive Media Division, part of the USC School of Cinema-Television. Bleecker’s work focuses on emerging technology design, research and development, implementation and concept innovation, particularly in the areas of pervasive media, mobile media, social networks and entertainment. He has a BS in Electrical Engineering and an MS in computer-human interaction. His doctoral dissertation from the University of California, Santa Cruz is on technology, entertainment and culture.

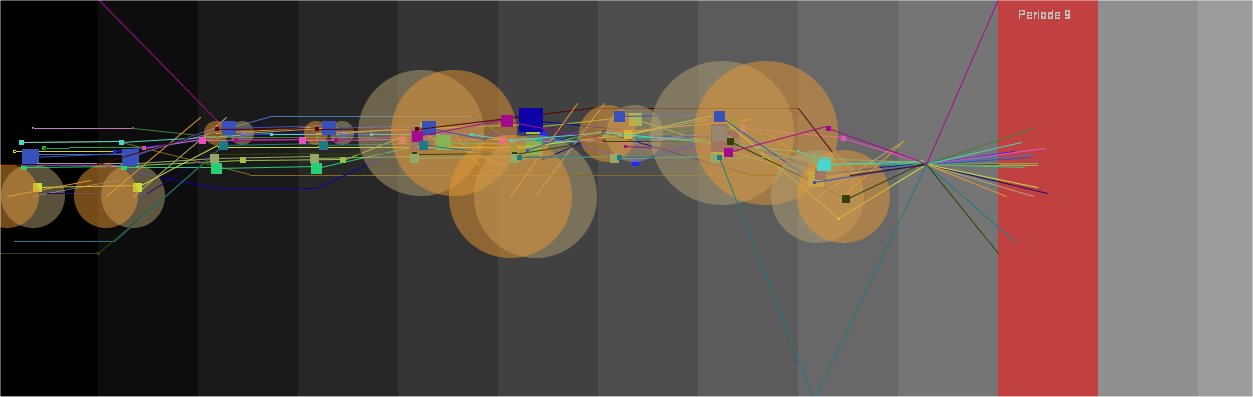

Nicolas Nova is a Ph.D. student at the CRAFT (Swiss Federal Institute of Technology Lausanne) working on the CatchBob! project. His current research is directed towards understanding how people use location-awareness information when collaborating in mobile settings, with a particular focus on pervasive games. After an undergraduate degree in cognitive sciences, he completed a master’s degree in human-computer interaction and educational technologies at TECFA (University of Geneva, Switzerland). His work is at the crossroads of cognitive psychology/ergonomics and human-computer interaction, relying on those disciplines to gain a better understanding of how people use technology such as mobile and ubiquitous computing.